My log files are getting too large to review and I want to move to a fixed file size log policy.

However, I wonder how I would then locate the right log file to check. Is there a naming convention or a search query that would let me find a specific point in time under a fixed file size log policy?

Otherwise, is there a way for me to generate a separate log file per method or per logger name?

In general, I am wondering what the best way to use logging for error handling is. It would be great to see a YouTube video on this.

Log files are flat files, so you can search them with any text editor. I am pretty sure there are also plenty of third-party tools that provide search capabilities for log files.

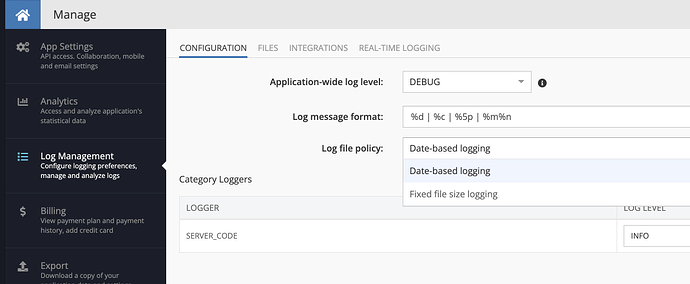

There are only two logging options in Backendless:

- Date-based logging

- Fixed file size logging

With the fixed file size, you can control the maximum size of the log file. When the file size reaches the limit, a new log file is created. Important: if the number of files becomes too big, it may result in problems with retrieving directory listings. If you know you will have LOTS of files, do not use this option.

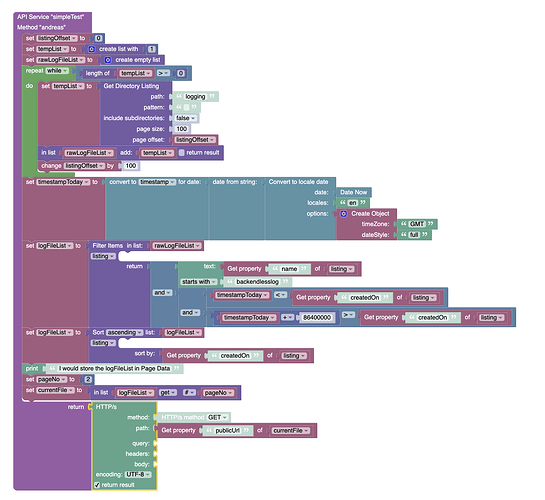

With fixed file size, how can I retrieve files created or updated on a particular day from the UI? Do I need to pull the full listing of files in the logging folder and then search the timestamps, or is there a way to pull only the listings for a particular day?

Unfortunately, the directory listing API doesn’t provide a filtering function to get files for a timeframe. Your only option is to get the full file listing and process the timestamps yourself.
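As a rough illustration of processing the timestamps on the client side, here is a minimal Python sketch. It does not call any Backendless API. It assumes you have already fetched the directory listing and converted it into a list of dictionaries, and that each entry has a `name` and a `createdOn` value in Unix milliseconds. Those field names and the `files_for_day` helper are assumptions made for this example, so adjust them to whatever your listing actually returns.

```python
from datetime import date, datetime, timezone


def files_for_day(listing, day):
    """Return the entries of `listing` whose timestamp falls on `day` (UTC).

    `listing` is a list of dicts that was already fetched elsewhere.
    Assumption: each dict has a "name" and a "createdOn" field, and
    "createdOn" is a Unix timestamp in milliseconds.
    """
    matches = []
    for entry in listing:
        # Convert milliseconds to a timezone-aware UTC datetime.
        stamp = datetime.fromtimestamp(entry["createdOn"] / 1000, tz=timezone.utc)
        if stamp.date() == day:
            matches.append(entry)
    # Oldest first, so the files read in the order they were written.
    return sorted(matches, key=lambda e: e["createdOn"])
```

Calling `files_for_day(listing, date(2021, 3, 5))` would then return only the entries created on that UTC day. If your listing spans several pages, collect all pages into one list before filtering.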

Regards,

Mark

Ok, for anyone else looking at this, here’s what I did: